Tencent’s tech team has optimized DeepSeek’s open-source DeepEP communication framework, boosting its performance across different network environments, according to the Chinese AI startup. Testing showed a 100% improvement on RoCE networks and a 30% gain on InfiniBand (IB), offering more efficient solutions for AI model training. On GitHub, DeepSeek acknowledged that the Chinese tech giant’s contribution had led to a “huge speedup.” DeepEP is a communication library tailored for mixture-of-experts (MoE) models and expert parallelism (EP), providing high-throughput, low-latency GPU kernels and support for low-precision computing, including FP8. Tencent’s Starlink Networking team identified two main bottlenecks: underutilized bandwidth on dual-port NICs and CPU control latency. After targeted optimizations, performance doubled on RoCE and improved by 30% on IB. The enhanced framework is now fully open-source and has been successfully deployed in training Tencent’s Hunyuan large model, demonstrating strong versatility in environments built on Tencent’s Starlink network and H20 servers, Chinese tech media outlet iThome reported. [iThome, in Chinese]

Related Articles

2025-06-26 11:02

1738 views

Today's Hurdle hints and answers for April 23, 2025

If you like playing daily word games like Wordle, then Hurdle is a great game to add to your routine

Read More

2025-06-26 10:18

1865 views

Faust and the Risk of Desire by Adam Kirsch

Faustand the Risk of DesireBy Adam KirschMay 12, 2023On MusicFaust and Mephistopheles. Painting by A

Read More

2025-06-26 10:07

2244 views

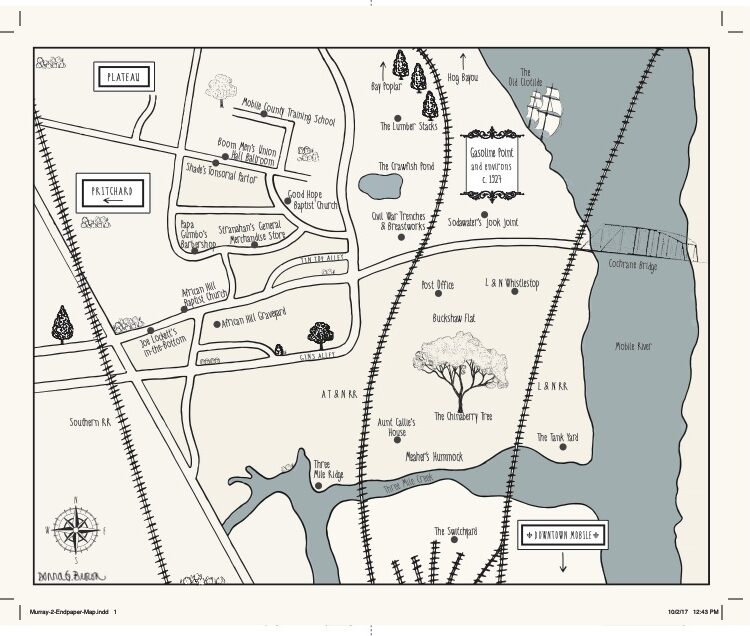

Mapping Africatown: Albert Murray and his Hometown by Nick Tabor and Kern M. Jackson

Mapping Africatown: Albert Murray and his HometownBy Nick Tabor and Kern M. JacksonApril 24, 2023On

Read More